Long ago, I installed Longhorn onto my Kubernetes cluster using Helm 2. Eventually Helm 3 was released and helm 2to3 was made available. However, I was not able to use helm 2to3 because Rancher didn’t deploy Tiller in the way that this CLI expected, and Rancher did not provide an upgrade mechanism of its own. Then Rancher 2.6 was released, which dropped Helm 2 support entirely, and I was stuck with a cluster where Longhorn was deployed but not managed by a working Helm installation.

I recently responded to the Log4j vulnerability. If you’re not aware, Log4j is a very popular logging library used in many Java applications. The vulnerability let malicious actors remotely take control of your computer by submitting a specially crafted request parameter that the application logs directly through Log4j.

This situation was not ideal since I was running several Java applications on my servers, so I decided to use Nmap to port scan my dedicated server and see which ports were open. I ended up finding a number of ports I didn’t expect, because several Kubernetes Service instances were being exposed as node ports.
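As a rough illustration of what such a scan does, here is a minimal TCP connect check in Python. It is a sketch, not a replacement for Nmap: the function name `open_tcp_ports` is made up for this example, and it only reports ports that complete a TCP handshake.

```python
import socket


def open_tcp_ports(host, ports, timeout=0.5):
    """Return the ports on `host` that accept a TCP connection."""
    found = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success and an errno value otherwise,
            # so closed or filtered ports are skipped without raising.
            if sock.connect_ex((host, port)) == 0:
                found.append(port)
    return found
```

Calling `open_tcp_ports("203.0.113.10", range(30000, 32768))` would probe the default Kubernetes NodePort range; the address is a placeholder from the documentation range, so substitute a server you own.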

Over the past year I helped a few people pick mortgages while buying their homes by helping them visualize the options offered by different companies. In a seller’s market, like where I live, you only get a few days to choose between several mortgages, each with different fees, points, and interest rates that together determine the monthly payment.

Given all this data, how do you compare the different options and decide which one to go with? The lowest monthly payment isn’t always the best option.
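One way to make that comparison concrete is to add up what each offer costs over the time you expect to keep the loan: upfront fees and points plus the interest paid in that period. The sketch below uses the standard fixed-rate amortization formula and assumes one point costs 1% of the loan amount. The `Offer` class, the helper names, and all the numbers are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    name: str
    annual_rate: float  # e.g. 0.06 for 6%
    points: float       # assumed: each point costs 1% of the loan amount
    fees: float         # lender fees paid at closing


def monthly_payment(principal, annual_rate, months):
    """Fixed payment for a fully amortizing loan."""
    r = annual_rate / 12
    if r == 0:
        return principal / months
    growth = (1 + r) ** months
    return principal * r * growth / (growth - 1)


def remaining_balance(principal, annual_rate, months, paid):
    """Balance still owed after `paid` monthly payments."""
    r = annual_rate / 12
    if r == 0:
        return principal * (1 - paid / months)
    payment = monthly_payment(principal, annual_rate, months)
    growth = (1 + r) ** paid
    return principal * growth - payment * (growth - 1) / r


def cost_over_horizon(offer, principal, months, horizon):
    """Upfront costs plus interest paid during the first `horizon` months."""
    payment = monthly_payment(principal, offer.annual_rate, months)
    balance = remaining_balance(principal, offer.annual_rate, months, horizon)
    interest = payment * horizon - (principal - balance)
    upfront = offer.fees + offer.points / 100 * principal
    return upfront + interest


# Hypothetical offers on a 300,000 loan over 30 years, kept for 7 years.
offers = [
    Offer("Lender A", annual_rate=0.0600, points=0.0, fees=1500),
    Offer("Lender B", annual_rate=0.0575, points=1.0, fees=2500),
]
for offer in offers:
    payment = monthly_payment(300_000, offer.annual_rate, 360)
    cost = cost_over_horizon(offer, 300_000, 360, horizon=84)
    print(f"{offer.name}: {payment:.2f}/month, {cost:.2f} over 7 years")
```

Changing `horizon` shows why the lowest monthly payment can lose: points and fees are paid immediately, so an offer that buys a lower rate only comes out ahead if you keep the loan long enough.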

In previous posts, I used the macvlan CNI to forward packets between containers and the rest of my network. However, I ran into several issues rooted in the fact that macvlan traffic bypasses several parts of the host’s IP stack, including conntrack and iptables. This conflicted with how Kubernetes expects to handle routing and meant we had to bypass and modify iptables chains to get it to work.

In the previous post (DHCP IPAM), we successfully got our containers running with macvlan + DHCP. I additionally installed MetalLB and everything seemingly worked. However, when I tried to retroactively add this to my existing Kubernetes home lab cluster, which was already running Calico, I was not able to access the MetalLB service. All connections were timing out.

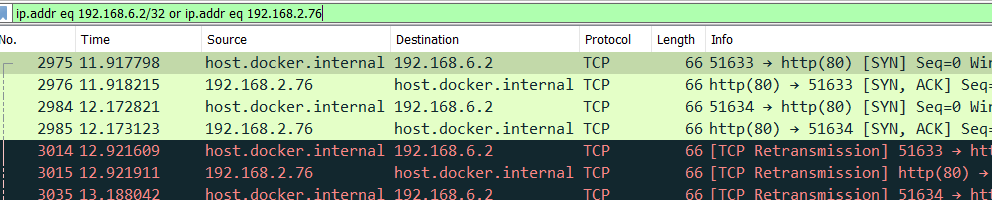

A quick Wireshark packet capture of the situation exposed this problem: