COE = Correction of Error

My previous employer, Amazon, was a big proponent of doing blameless analysis of outages and figuring out what could be done to fix it. I recently had an outage on my servers and wanted to share what went wrong and the fix.

Summary

Starting Thursday until Friday, all TLS requests to a *.technowizardry.net domain would have failed due to a TLS certificate expiration error. Then on Friday, all DNS queries to a *.technowizardry.net zone failed which also caused mail delivery to fail too. This happened because cert-manager had created the acme-challenge TXT record, but the record was not visible to the Internet because the HE DNS was failing to perform an AXFR Zone Transfer from my authoritative DNS server. This was because PowerDNS was unable to bind to port :53 because systemd-resolved was already listening on that port.

While trying to fix the issue, I temporarily deleting the entire zone in Hurricane Electric which then triggered an issue how PowerDNS handles ALIAS records that blocked further AXFR transfers from succeeding. This required manual effort to unblock the transfer to let the zone start working again and allowed the TLS certificate to be renewed.

Additionally, Dovecot, which handles mail storage, failed to start after being rebooted because a Kubernetes sidecar container couldn’t start because of a “too many open files” error while setting up an inotify (to listen for file changes) because of a low ulimit.

Background

DNS

On each of my three servers, I run the PowerDNS authoritative server storing zone records in MySQL. I use cert-manager to create and renew TLS certificates from Let’s Encrypt in my Kubernetes cluster. Since I use wildcard certificates, Let’s Encrypt requires me to use the DNS verification method which means cert-manager has to have permissions to create and modify TXT records. It creates records in PowerDNS using the API.

I have three dedicated servers running my Kubernetes cluster. Three is chosen to ensure that if one machine is offline, I can continue to serve critical services, like this blog. However, they are located geographically all in the north-east area of the US and Canada. For performance, I wanted to distribute out the DNS servers so they’re faster. Thus, I adopted Hurricane Electric’s DNS service to serve as authoritative DNS servers. These servers automatically perform what’s called an DNS Zone Transfer or an AXFR from my servers to their servers to keep in sync.

When cert-manager updates a record using the API, PowerDNS automatically updates the SOA record to tell other secondary resolvers that there’s an update. As of now, my SOA record looks like:

| |

HE DNS checks for any changes to the zone serial number on my server, and if mine is newer, it pulls the newest version.

I use Dovecot to store email and implement IMAP/POP3. This is running in Kubernetes and is exposed as a Kubernetes service. It’s not configured in a Highly Available mode and only has one instance running because I never spent the time to figure out Dovecot HA. I then expose the port using ingress-nginx’s mechanism to expose TCP services.

Running as a side-car, I have a config-reloader container which runs quietly and listens for changes in the ConfigMap configuration files or the SSL certificate and if a change is detected, it sends a SIGHUP to Dovecot to reload the configuration. This allows me to avoid having to fully delete and restart the service to make config changes and automatically handle SSL certificate renewals. The deployment looks like this:

| |

Investigation

DNS

I was on vacation with limited access to the Internet so it took a lot longer than it should have to figure it out and fix the problem.

First thing to check is server logs. cert-manager showed:

| |

The PowerDNS pods showed:

| |

Comparing SOA records shows a difference. HE is not pulling updates.

| |

Without SSH access and using an eSIM with a very limited data cap, I can’t investigate what’s listening, but it’s definitely the systemd-resolved. In the mean time, I use Hurricane Electric’s website to change from a secondary DNS zone type to a primary zone which means I can edit the records in the website and skip AXFR. A day later I had full Internet access and could get to work. I wanted to switch back to using the DNS Zone transfer mechanism and have Hurricane Electric pull from my PowerDNS, but they kept failing to pull my server. I couldn’t figure out why because the server was responding to some queries.

Manually trying to pull the AXFR zone didn’t succeed and gave no error messages back. I use TSIG to authenticate transfers. I double and triple-checked that it was correct.

| |

The server logs gave no error messages as to why. In the pdns.conf, I changed the logging level from 3 to 5 to increase logging messages.

| |

Then retried and got this:

| |

Ah ha. I’m using an ALIAS record type on apex record, technowizardry.net because I can’t use a CNAME record (CNAME tells resolvers to go look at another record to find the value) CNAMEs can’t be used on the root domain because of “reasons” that are boring, legacy, and unfortunate. I use these records because I point everything to a common record ingress-nginx.technowizardry.net which includes all three servers. As I do maintenance, I take them out and put them back in that single record.

Unfortunately, it seems that even though technowizardry.net is an ALIAS to ingress-nginx.technowizardry.net which this server knows about, it ends up trying to query a resolver on the Internet for that value. However, in this case, no server (which is my HE DNS) is able to handle that query because they’re failing to AXFR transfer. Thus we have a circular loop.

I consider this to be a bug in PowerDNS given that it can absolutely handle this query. However, I suspect they may not have implemented this to avoid issues where somebody creates an ALIAS on a subdomain that is on another NS server.

I quickly disable all ALIAS records to get back in business.

| |

After that the DNS zone transfer succeeds. I can re-enable the ALIAS records. Eventually, the TLS certificate renewal succeeds and we’re mostly golden.

Except for mail is not coming back. The pod is marked as unhealthy which means that ingress-nginx won’t forward traffic to it. However, Dovecot itself is fine. It’s the config reloader that’s restarting with a “too many open files” error. It only opens like five files. How could that be. Well, it’s the only container that uses Inotify which is a Linux feature that sends notifications when files are updated. This one was interesting. Some GitHub issues talk about this, but it’s unclear. My intuition suggests it’s a ulimit or sysctl issue causing it to be unable to startup. Coincidentally, the pod is running on my node that is running NixOS. The other two haven’t been changed over yet.

| |

The limit was pretty low. That’s the culprit.

Why

The next section is asking Five whys to get to the bottom. I’ll break it down to different problems.

Why didn’t cert-manager renew the certificate?

- cert-manager was unable to renew certificates. Why?

- cert-manager was waiting for the acme-challenge TXT record to be available? Why wasn’t it available?

- cert-manager had created the TXT record in the authoritative PowerDNS instance, but the NS servers listed on the technowizardry.net zone (run by Hurricane Electric) did not have the TXT record. Why didn’t they have the record?

- Hurricane Electric failed to perform a DNS zone transfer. Why couldn’t they transfer?

- The DNS query was not making it to PowerDNS which could handle the transfer. Why?

- PowerDNS wasn’t able to bind to the port because systemd-resolved was already listening. Why wasn’t it already disabled?

- Not sure. Maybe an OS upgrade reverted my change or maybe I had only temp disabled it.

Why didn’t mail services start to work?

- The dovecot service wasn’t starting up. Why?

- Because the config-reloader side-car was crash looping. Why?

- Because it was unable to setup the inotify that was needed. Why?

- The sysctl was set to low. Why?

- It was never changed from the default. Why?

- First time I hit this problem

Action Items

Disable systemd-resolved resolver

The first action is to disable the systemd-resolved local resolver which listens on the same port. My servers then forwards DNS off host instead of using a local cache.

On NixOS, this can done using:

| |

And on other machines, it’s like:

| |

Increase inotify limits

Next, we need to increase the sysctl limits. The Nix team discussed increasing this limit by default, but the related PR was abandoned.

| |

Add more monitoring

I’m using kube-prometheus-stack to monitor my cluster. I didn’t know my certificate was failing to renew for over a week. Had I known, I could have fixed it ahead of the expiration. Let’s add an alert so I get an email next time.

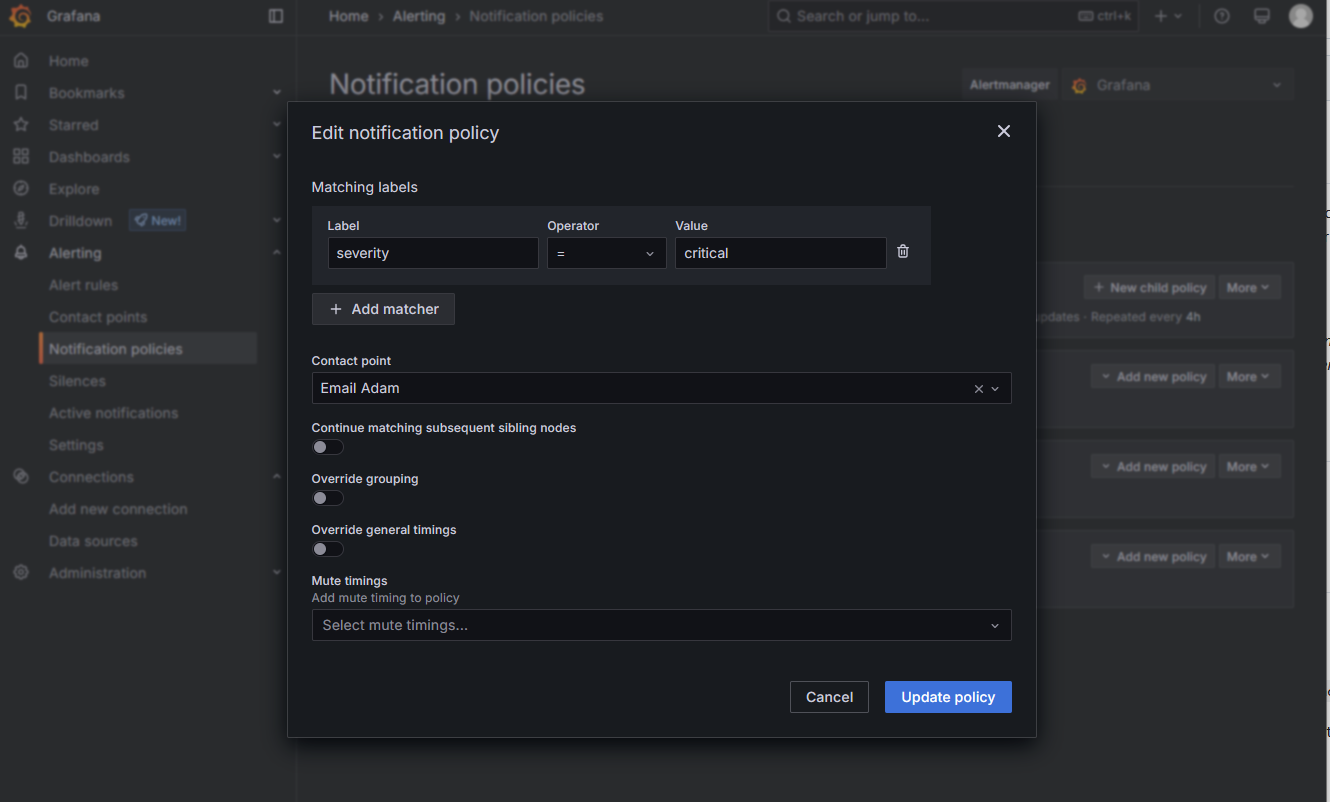

Here I create a PrometheusRule object which tells Prometheus’ AlarmManager to start watching for this metric expression to trigger.

| |

I use Grafana dashboards to visualize and send alerts. I configured a contact point that sends me an email and a notification policy to catch the above alarm.