Previously in my Home Lab series, I described how my home lab Kubernetes cluster runs with a DHCP CNI: every pod gets a DHCP-assigned IP address on the same layer 2 network as the rest of my home. This let me run software that needs layer 2 access, like Home Assistant, which uses mDNS and broadcast packets to discover devices.

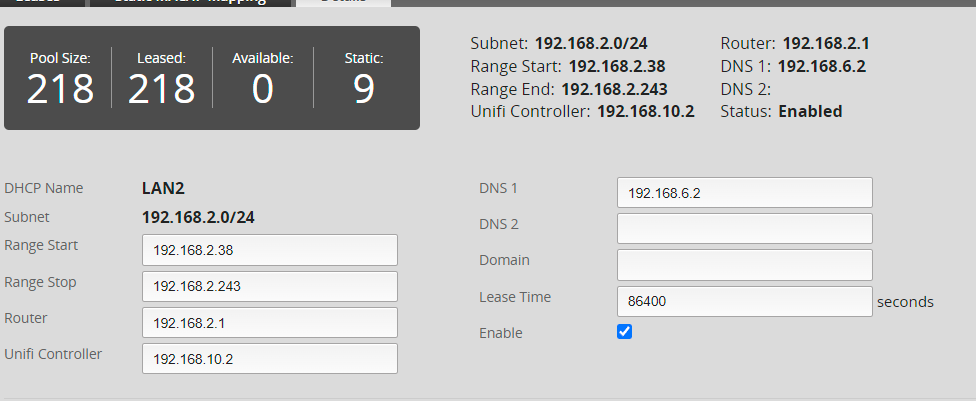

However, not every pod needs to be on the same layer 2 network, and putting them all there led to a few situations where I ran out of IP addresses on the DHCP server and couldn’t connect any new devices until leases expired:

My DHCP IP pool completely out of addresses to give to clients

I also had a circular dependency where the main VLAN told clients to use a DNS server that was running in Kubernetes. If I had to reboot the cluster, it could get stuck starting because the nodes tried to query a DNS server that hadn’t started yet. (For simplicity, I use DHCP for everything instead of static config.)

In this post, I explain how I built a new home lab cluster with K3s and used Multus to run both Calico and my custom Bridge+DHCP CNI so that only pods that need layer 2 access get access.

I wanted to move the K8s pods into a separate IP pool and VLAN so I could reduce the blast radius of something going wrong.

If you’re trying to start a K3s cluster (like I am) from scratch, then you may run into issues where the K3s cluster that Rancher provisions won’t start without a working CNI. If this happens, check out my other post on how to install the CNI.

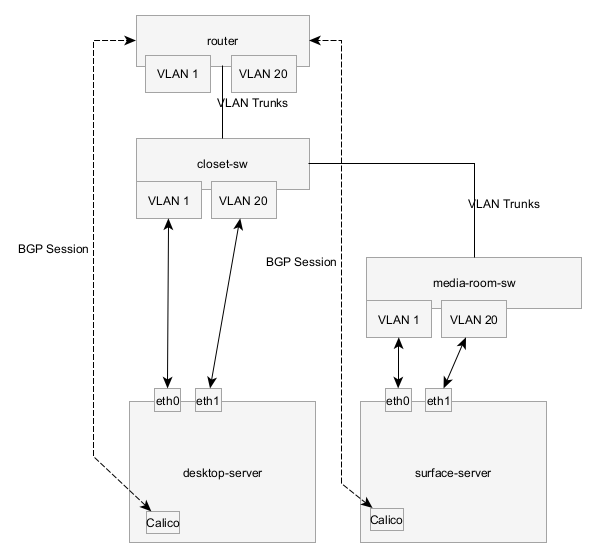

Network Configuration

I created a new VLAN (ID 20), trunked it to my router and all switches, configured the router as the DHCP server for it, and enabled the router to route traffic between VLANs and the internet.

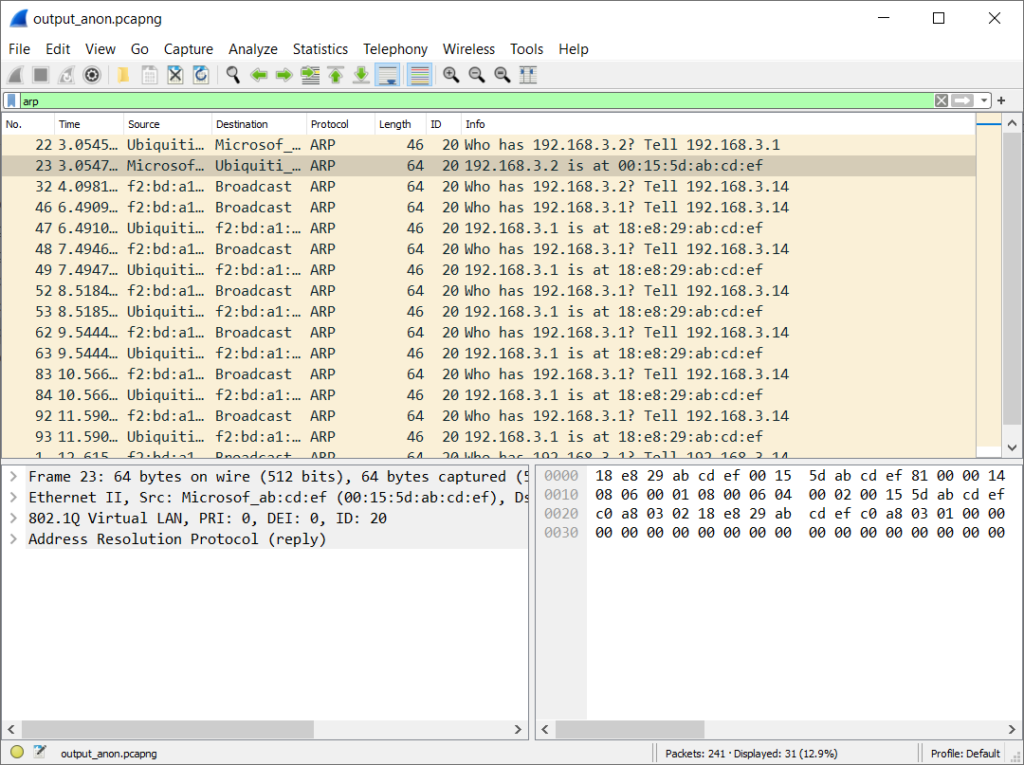

I tried trunking VLAN 20 to both computers (leaving the default VLAN untagged). However, I ran into an issue where the Surface Dock 2 Ethernet adapter wouldn’t work: on the VLAN-tagged adapter it couldn’t receive ARP packets from certain devices on the network. This didn’t make any sense, because the same adapter was able to get an IP address from DHCP.

The router wasn’t able to send ARP responses or queries to the VM, but other machines on the network were. The only difference I could see was the packet length: the failing packets were always shorter than 64 bytes, even though Ethernet is supposed to pad every frame to a minimum of 64 bytes. I couldn’t explain this, so instead I bought a USB-Ethernet adapter for this computer and used that for my secondary network.

A packet capture from a tap on the Ethernet cable showing packets, but the responses never made it to the IP stack in the VM

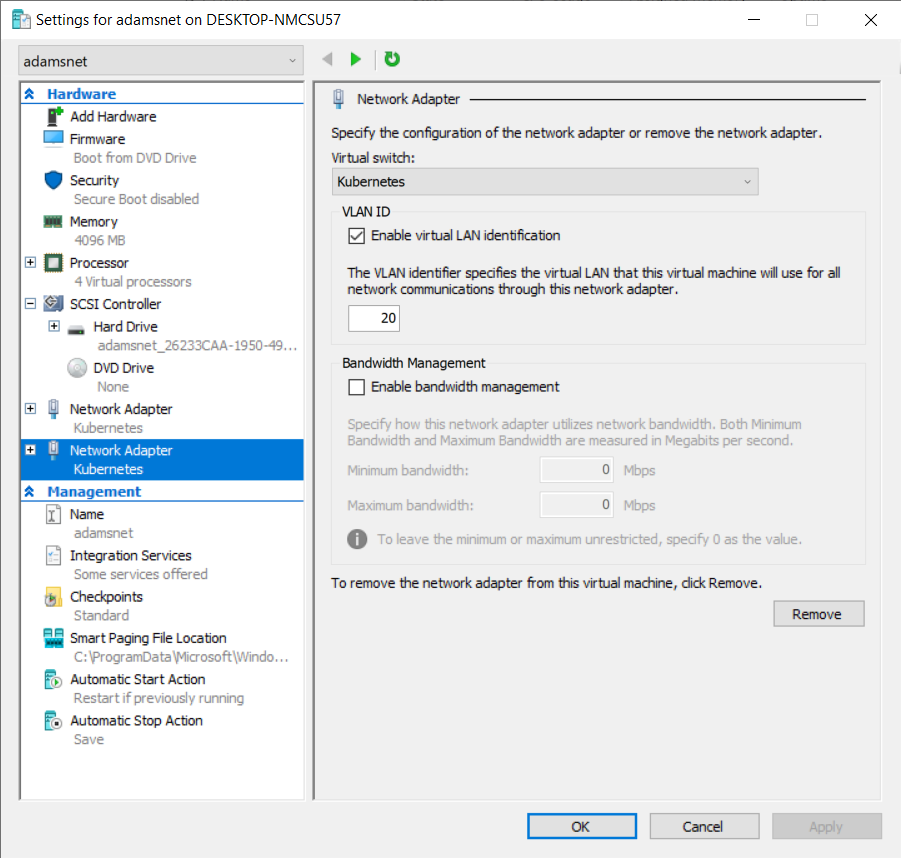

VM Network Adapter Configuration

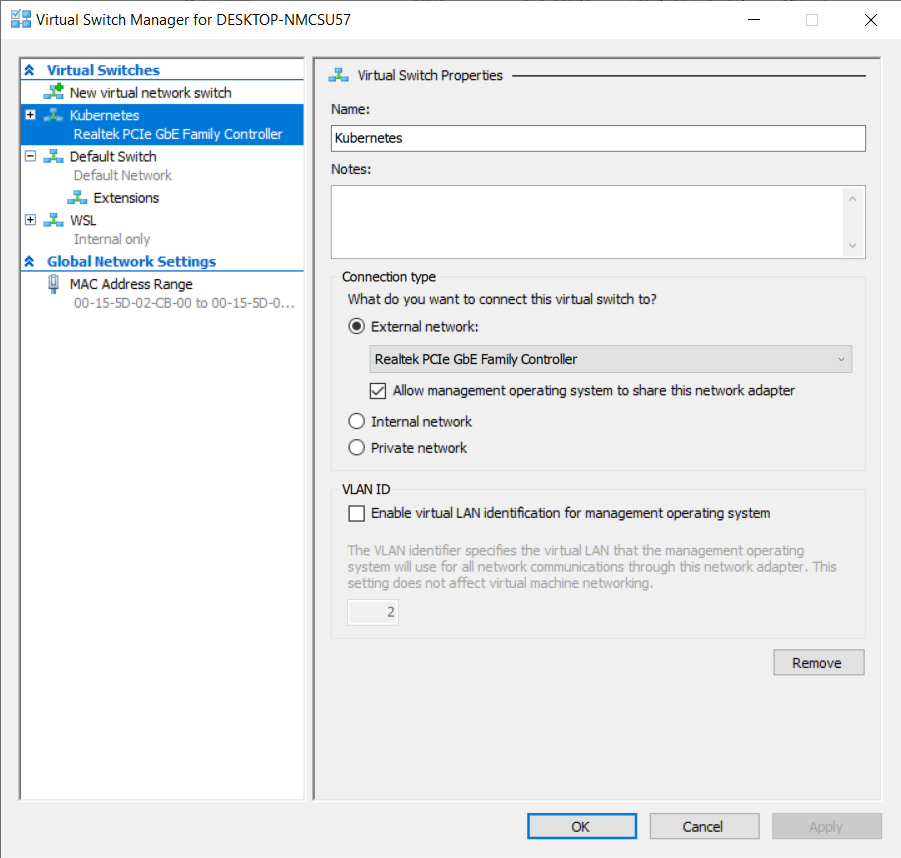

I’m running my Kubernetes nodes as Hyper-V VMs on two of my Windows computers.

I previously created a virtual switch for Kubernetes that references my Ethernet adapter (see Part 1 if you’re interested in the step-by-step):

Then I created a virtual machine with two network adapters, both bound to that same switch, with one of them tagged with VLAN ID 20.

Cluster Configuration

I’m using Rancher’s UI to provision a K3s cluster. By default, K3s uses Flannel as its CNI, but I want Multus with Calico instead. While creating the cluster, set the agent environment variable INSTALL_K3S_EXEC to --flannel-backend=none --disable-network-policy to deploy without the default Flannel CNI, then follow this guide.

| |

OS Network Configuration

Once Linux is booted in the VM, we can set up the network adapters inside the VM. For more details, see my post on Bridge + Systemd.

| |

The eth1 adapter should be bound to the VLAN 20 network adapter:

| |

Now I end up with the following route table:

| |

Intro to Multus

Multus is a special CNI that lets you configure one or more network interfaces in a pod’s network namespace. Every pod always gets the interface from the default cluster network (the master plugin). Pods can then add a special annotation to get additional network adapters.

I’m going to use Calico as my cluster network plugin because it supports BGP and is what I was already using based on previous posts in my series.

My DHCP CNI will be the optional secondary network attachment.

Installing Multus

Unfortunately, Multus doesn’t currently provide a Helm chart. Instead, they only provide a YAML file that needs to be modified before it can be used.

First, download the multus-daemonset.yml from their GitHub repository and save it.

Find the ConfigMap that defines multus-cni-config. This is what defines the primary network plugin.

Since I’m using Calico, I used the following ConfigMap:

| |
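As a rough illustration of the shape such a ConfigMap can take (a sketch, not necessarily the exact one used here): the delegate below mirrors the kind of CNI config Calico normally writes for itself. The two kubeconfig paths and the network name k8s-pod-network are assumptions that depend on how Calico and K3s are installed, so compare them against the files on your own nodes before using anything like this.

```yaml
kind: ConfigMap
apiVersion: v1
metadata:
  name: multus-cni-config
  namespace: kube-system
data:
  # Key name matches the one used in the upstream multus-daemonset.yml
  cni-conf.json: |
    {
      "name": "multus-cni-network",
      "type": "multus",
      "cniVersion": "0.3.1",
      "kubeconfig": "/etc/cni/net.d/multus.d/multus.kubeconfig",
      "delegates": [
        {
          "name": "k8s-pod-network",
          "cniVersion": "0.3.1",
          "plugins": [
            {
              "type": "calico",
              "datastore_type": "kubernetes",
              "ipam": { "type": "calico-ipam" },
              "policy": { "type": "k8s" },
              "kubernetes": { "kubeconfig": "/etc/cni/net.d/calico-kubeconfig" }
            },
            { "type": "portmap", "snat": true, "capabilities": { "portMappings": true } }
          ]
        }
      ]
    }
```

The first entry in delegates is what Multus treats as the default cluster network, which is why Calico goes there.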

Then find the kube-multus-ds DaemonSet and change both the args and the volumes sections to look like the ones below. This forces Multus to run before Calico.

| |
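A minimal sketch of what those two sections might look like, assuming the layout of the upstream multus-daemonset.yml (a multus-cfg volume backed by the ConfigMap and mounted at /tmp/multus-conf). The 00- file name prefix is my assumption for how to sort ahead of Calico’s own config file, since the container runtime uses the first config it finds in lexical order. On K3s the host CNI directories also differ from the upstream defaults, so the hostPath volumes (not shown) would need adjusting as well.

```yaml
# Fragment of the kube-multus-ds pod spec (sketch, not the full manifest)
containers:
  - name: kube-multus
    args:
      # Use the config from the ConfigMap instead of auto-generating one
      - "--multus-conf-file=/tmp/multus-conf/00-multus.conf"
    volumeMounts:
      - name: multus-cfg
        mountPath: /tmp/multus-conf
volumes:
  - name: multus-cfg
    configMap:
      name: multus-cni-config
      items:
        - key: cni-conf.json
          # Assumption: a "00-" prefix sorts before Calico's conflist
          path: 00-multus.conf
```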

Install Calico

Now you can install Calico as you normally would. I’ve already got a guide on how I configure Calico in my network here.

When installing with the Tigera Operator, make sure to configure the nodeAddressAutodetectionV4/V6 settings to use the VLAN 20 interface (eth1 in my case).

| |
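For reference, a minimal sketch of the relevant part of the operator’s Installation resource, assuming the resource keeps the operator’s default name and that only IPv4 autodetection needs pinning. The rest of the spec (IP pools, BGP, and so on) is omitted.

```yaml
apiVersion: operator.tigera.io/v1
kind: Installation
metadata:
  name: default
spec:
  calicoNetwork:
    # Pick the node address from the VLAN 20 adapter (eth1 in this setup)
    nodeAddressAutodetectionV4:
      interface: eth1
```

The interface field is matched as a regular expression, so a pattern can be used if the adapter name differs between nodes. nodeAddressAutodetectionV6 takes the same shape.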

After installing Calico, the cluster should start up correctly and you should be able to launch pods with at least internet connectivity. Next, we need to configure the layer 2 network CNI.

Install the Layer 2 Bridge CNI

Now install the DHCP CNI and DaemonSet that I’ve been working on in previous posts (see here):

github.com/ajacques/cni-plugins/…/dhcp/k8s.yaml:

kubectl apply -f https://raw.githubusercontent.com/ajacques/cni-plugins/bridge/plugins/ipam/dhcp/k8s.yaml

Then create a Multus NetworkAttachmentDefinition:

| |
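A sketch of what such an attachment could look like, assuming the stock bridge plugin field names, a bridge called cni0 on the host, and DHCP for IPAM. The name lan is hypothetical, and the custom Bridge+DHCP plugin from this series may expect different or additional options.

```yaml
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  # Hypothetical name; pods reference it in their annotation
  name: lan
  namespace: default
spec:
  config: |
    {
      "cniVersion": "0.3.1",
      "name": "lan",
      "type": "bridge",
      "bridge": "cni0",
      "ipam": { "type": "dhcp" }
    }
```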

Updating the Deployment

Configuring the deployment to use dual network adapters is easy. Add the annotation to the pod template’s annotations, not the Deployment’s own annotations:

| |
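To illustrate where the annotation goes, here is a trimmed Deployment sketch. It assumes a NetworkAttachmentDefinition named lan (a hypothetical name) in the same namespace; the labels and image are placeholders too.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: home-assistant
  # Annotations placed here apply to the Deployment object only;
  # Multus never sees them.
spec:
  selector:
    matchLabels:
      app: home-assistant
  template:
    metadata:
      labels:
        app: home-assistant
      annotations:
        # Multus reads this from the pod and attaches the extra interface
        k8s.v1.cni.cncf.io/networks: lan
    spec:
      containers:
        - name: home-assistant
          image: ghcr.io/home-assistant/home-assistant:stable
```

With a single extra attachment, Multus names the additional interface net1 inside the pod by default.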

Solving Network Routing Problems

As I encountered previously in the series (in Part 5), the containers have an entirely separate route table:

| |

When HomeAssistant tries to send a Wake-on-LAN packet to turn on my TV at IP address 192.168.2.xy, it needs to send a packet to the broadcast address 192.168.2.255. But now that traffic isn’t making it directly out onto the layer 2 network. Instead, it matches the default route and goes through the host. That adds a layer 3 hop, and broadcast and multicast packets aren’t forwarded across a layer 3 hop.

We need to tell the container that it can send traffic destined for the main LAN towards the cni0/eth0/VLAN 1 network adapter.

I tried creating a custom route by using the redhat-nfvpe/cni-route-override plugin:

| |

This allows the container to send traffic through cni0 onto the correct VLAN, but with the wrong source IP: the traffic leaves as 192.168.7.xy (the Calico pod subnet). The container route table looks like this:

| |

The route is missing a src 192.168.2.xyz to tell the Linux IP stack to use the right source IP address.
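To make the difference concrete, here is a small Go sketch that only renders the iproute2 commands for the two variants of the route; it does not touch the kernel. The helper name routeCommand is hypothetical, net1 is assumed as the interface name inside the pod (the Multus default for the first extra attachment), and 192.168.2.158 is the example pod address used later in this post.

```go
package main

import "fmt"

// routeCommand renders an iproute2 command that adds a route to dst via dev.
// When src is non-empty it appends "src <addr>", which tells the kernel which
// local address to prefer as the source for traffic matching this route.
func routeCommand(dst, dev, src string) string {
	cmd := fmt.Sprintf("ip route add %s dev %s", dst, dev)
	if src != "" {
		cmd += " src " + src
	}
	return cmd
}

func main() {
	// Roughly what the route-override approach produces: no source hint.
	fmt.Println(routeCommand("192.168.2.0/24", "net1", ""))
	// What is actually needed: the LAN address as the preferred source.
	fmt.Println(routeCommand("192.168.2.0/24", "net1", "192.168.2.158"))
}
```

The only difference between the two commands is the trailing src clause, which is exactly the part the route is missing.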

I see the same problem with the multicast discovery traffic that HomeAssistant uses to find devices on the local network. The following DEBUG log shows it creating a socket from the Calico address 192.168.7.253 to 239.255.255.250, the multicast address for SSDP (UPnP discovery):

2022-04-17 00:19:51 DEBUG (MainThread) [async_upnp_client.ssdp] Creating socket, source: (<AddressFamily.AF_INET: 2>, <SocketKind.SOCK_DGRAM: 2>, 17, '192.168.7.253', ('192.168.7.253', 0)), target: (<AddressFamily.AF_INET: 2>, <SocketKind.SOCK_DGRAM: 2>, 17, '239.255.255.250', ('239.255.255.250', 1900))

Unfortunately, the route-override CNI plugin doesn’t let us set the source field on a route, so we have to write our own CNI plugin.

To figure out how to create the right route, we need the subnet of the local network and the name of the network adapter inside the container. Kubernetes stores all of the outputs from the CNI plugins in /var/lib/cni. If we inspect the output file, we can see that this information will get passed into a custom CNI:

| |
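As an illustration of pulling those two values out of a CNI result, here is a standard-library-only Go sketch. It assumes the standard CNI result shape, where each entry in ips carries an index into interfaces. The sample JSON is hypothetical (only the address 192.168.2.158/24 comes from this post), and the helper name firstAddress is mine, not the plugin’s.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// cniResult models only the fields of a CNI result that we need.
type cniResult struct {
	Interfaces []struct {
		Name    string `json:"name"`
		Sandbox string `json:"sandbox"`
	} `json:"interfaces"`
	IPs []struct {
		Interface *int   `json:"interface"`
		Address   string `json:"address"`
	} `json:"ips"`
}

// firstAddress returns the interface name and CIDR address of the first IP
// entry in the result.
func firstAddress(raw []byte) (string, string, error) {
	var r cniResult
	if err := json.Unmarshal(raw, &r); err != nil {
		return "", "", err
	}
	if len(r.IPs) == 0 {
		return "", "", fmt.Errorf("result has no ips")
	}
	ip := r.IPs[0]
	if ip.Interface == nil || *ip.Interface < 0 || *ip.Interface >= len(r.Interfaces) {
		return "", "", fmt.Errorf("ip entry has no valid interface index")
	}
	return r.Interfaces[*ip.Interface].Name, ip.Address, nil
}

// Hypothetical sample: bridge, host-side veth, and the pod-side interface.
const sample = `{
  "cniVersion": "0.3.1",
  "interfaces": [
    {"name": "cni0"},
    {"name": "veth1234abcd"},
    {"name": "net1", "sandbox": "/var/run/netns/example"}
  ],
  "ips": [
    {"version": "4", "interface": 2, "address": "192.168.2.158/24"}
  ]
}`

func main() {
	name, addr, err := firstAddress([]byte(sample))
	if err != nil {
		panic(err)
	}
	fmt.Println(name, addr)
}
```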

The full code for the CNI is here. A breakdown:

First, we grab a reference to the network namespace for the container (CNI passes this in directly). linkName will be the name of the interface once we’re inside the container, and containerNet is 192.168.2.158/24.

| |

Then switch into the container’s network namespace and get a reference to the adapter:

| |

Next, we need to convert the IP address 192.168.2.158/24 to 192.168.2.0/24, since Linux doesn’t allow the former as a route destination, and then add it as a route:

| |
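The conversion itself needs nothing beyond Go’s standard library. A minimal sketch, with the helper name splitCIDR being hypothetical: net.ParseCIDR returns both the host address and the network with the host bits already masked off.

```go
package main

import (
	"fmt"
	"net"
)

// splitCIDR takes a host address in CIDR form (for example 192.168.2.158/24)
// and returns the network in CIDR form plus the bare host address.
func splitCIDR(cidr string) (network string, host string, err error) {
	ip, ipnet, err := net.ParseCIDR(cidr)
	if err != nil {
		return "", "", err
	}
	// ipnet.IP already has the host bits cleared by ParseCIDR.
	return ipnet.String(), ip.String(), nil
}

func main() {
	network, host, err := splitCIDR("192.168.2.158/24")
	if err != nil {
		panic(err)
	}
	fmt.Println("route destination:", network)
	fmt.Println("source address:   ", host)
}
```

The network string is what goes in as the route destination, and the host address is what the route’s source field should carry.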

Then create a similar route for multicast traffic:

| |
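Assuming the multicast route targets the whole IPv4 multicast range, 224.0.0.0/4 (the exact prefix is my assumption here), a quick standard-library check shows which of the destinations mentioned in this post such a route would cover. The helper name coveredBy is hypothetical.

```go
package main

import (
	"fmt"
	"net"
)

// coveredBy reports whether addr falls inside the given CIDR prefix.
// Invalid input is treated as "not covered".
func coveredBy(prefix, addr string) bool {
	_, network, err := net.ParseCIDR(prefix)
	if err != nil {
		return false
	}
	ip := net.ParseIP(addr)
	return ip != nil && network.Contains(ip)
}

func main() {
	const multicast = "224.0.0.0/4" // full IPv4 multicast range

	for _, dst := range []string{
		"239.255.255.250", // SSDP, as seen in the debug log
		"224.0.0.251",     // mDNS
		"192.168.2.255",   // LAN broadcast, handled by the subnet route instead
	} {
		fmt.Printf("%-16s covered by %s: %v\n", dst, multicast, coveredBy(multicast, dst))
	}
}
```

The LAN broadcast address is not multicast, which is why both routes are needed: the subnet route for unicast and broadcast traffic, and the multicast route for discovery protocols.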

This is all taken care of if you use the Docker image I wrote and update the network attachment:

| |

Now, if we redeploy HomeAssistant it successfully discovers devices on my LAN!

Conclusion

In this post, I pulled together several techniques from previous posts in this series to show how to use Multus to run both the Calico and Bridge+DHCP CNIs at the same time. Calico lets us isolate pod traffic on a separate VLAN and avoid consuming all the IP addresses in the LAN, while Multus with the bridge CNI ensures that software like HomeAssistant, my Sonos control software, and anything else that uses mDNS can continue to discover devices as it should.