Other titles:

- You were supposed to bring balance to Kubernetes, Rancher, not destroy it

- Et tu, Rancher?

I’ve been maintaining my own dedicated servers for around 7 years now as a way to learn, improve my skills, and have a place to run my various websites, mail servers, and even this blog. Over the years the hardware has changed, and I’ve moved from hosting Rails applications directly on the OS to Docker and finally to Kubernetes. I’ve learned so many skills that eventually helped me in my professional career that it’s definitely been worth it, but maintaining this server has had its massive pain points, where I’ve just had to walk away and leave things broken for days until I finally fixed the issues.

I selected Rancher several years ago (at least 3 or 4 years ago, I’d estimate) when I finally moved to Kubernetes. I liked how it automatically provisioned my clusters, managed networking, and provided a nice UI. It was also reasonably well recommended around the internet. Things worked reasonably well, but after adopting Rancher and Kubernetes, every 6-12 months something would break massively and I’d have to painstakingly rebuild the entire Kubernetes cluster. Many times I told myself that if it broke again, I’d swear off Rancher entirely, but it never happened because I eventually got everything working.

After upgrading to Rancher v2.6.3, which launched yesterday, and finding that all my clusters had been removed from Rancher, I hit my breaking point.

Maybe this was just because Rancher wasn’t designed for single-node clusters, but in my experience at my employer, where we had much larger clusters, problems only get harder when you’re working with distributed systems.

Maybe it was because I didn’t know what I was doing, but I consider myself to be somewhat knowledgeable and skilled in different aspects of software engineering. I’ve designed and developed software in big data processing pipelines, services, web applications, etc. I’ve worked in different paradigms. Does this give me sufficient credentials? Not really, but I feel confident saying this is not 100% operator error. If there’s any software that needs to focus on reliability and correctness, it’s orchestration software.

Why do I claim Rancher is painful and unreliable?

Rancher v2.6.3 upgrade

I upgraded from Rancher v2.6.2 to the just-launched v2.6.3. As soon as it came up, I found that all my clusters were missing. The clusters were still running, but Rancher had lost communication with them, so I could no longer manage them. Luckily I had taken an etcd backup right before, but apparently this was insufficient: Rancher had made several other changes to /var/lib/rancher, so restoring the snapshot alone prevented the Rancher server container from coming up.

If I recall correctly, it was a TLS certificate issue with etcd, but I had only restored the snapshot, so why did this break? I ended up having to go even farther back, to a backup of /var/lib/rancher I had made during a previous Rancher crash. Restoring that first and then restoring the etcd snapshot worked. Rancher does publish a guide on backing up Rancher, which I’m planning to test before the next time.
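The takeaway is that the etcd snapshot and /var/lib/rancher have to be captured together, from the same moment. Rancher’s own backup guide is the procedure to follow; purely as an illustration, here is a minimal Python sketch that archives a Rancher data directory into a timestamped tarball. It assumes a single-node Docker install where /var/lib/rancher is a plain bind mount on the host and the Rancher container has already been stopped. The function name archive_rancher_data and the file-name pattern are hypothetical, not something Rancher provides.

```python
import tarfile
import time
from pathlib import Path


def archive_rancher_data(data_dir: str, dest_dir: str, version: str) -> Path:
    """Create a gzipped tarball of data_dir (e.g. /var/lib/rancher).

    Assumption: the Rancher container is stopped, so files are not changing
    while we read them, and data_dir is a host directory (bind mount), not a
    named Docker volume.
    """
    src = Path(data_dir)
    if not src.is_dir():
        raise FileNotFoundError(f"{src} is not a directory")
    stamp = time.strftime("%Y%m%d-%H%M%S")
    # Hypothetical naming scheme: Rancher version plus timestamp.
    dest = Path(dest_dir) / f"rancher-data-backup-{version}-{stamp}.tar.gz"
    with tarfile.open(dest, "w:gz") as tar:
        # arcname stores paths relative to the directory's own name ("rancher/...").
        tar.add(src, arcname=src.name)
    return dest
```

Whatever tool produces the etcd snapshot, the point is to store it next to this archive so the two can be restored as a matched pair.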

However, the main problem was that Rancher completely lost all my clusters during a single patch-version bump (0.0.1). Maybe I was just unlucky and hit a rare edge case, but based on my experience it doesn’t seem like it, and looking through the GitHub issues, I’m not alone: 1, 2.

Multiple Rancher cluster losses

Over the years, I’ve had to rebuild the cluster from scratch maybe 3-4 times. Something will break (unfortunately I don’t recall the details), and I’ll have to provision a brand new cluster and recreate all my applications. And it’s always painful. Sometimes I still have the previous etcd snapshot folder, so I can copy the Kubernetes YAML files out of it and reapply them directly, but this is extremely onerous.

In fact, this happened right before my birthday one year, and I had to rebuild everything from scratch.

I enabled backups on my clusters too, but they don’t always help. My install is configured so that Rancher has a local cluster plus my main cluster, which is the standard single-node install. Unfortunately, this means that if that management state gets lost and the cluster is broken, you can’t restore the cluster from a backup, because the Rancher management install won’t know about the downstream cluster, and you’re stuck.

However, judging by the age of this cluster, I haven’t experienced a full cluster outage in about 2 years. Maybe I got better at preventing them, but if it does happen again, I’m not sure the built-in backup feature will be sufficient to recover from every failure if the cluster doesn’t boot.

Rancher + Helm = Sadness 😢

I eventually upgraded to Rancher v2.6.0 from v2.5.x and I found several issues with Helm.

Helm 2 support was completely removed. I’m not frustrated that it was removed, since I know Helm 2 is old, but the helm 2to3 plugin didn’t work for me in Rancher. It never found Tiller, so I couldn’t upgrade anything. I ended up manually deleting and recreating a few Helm applications entirely, which was intrusive, but I didn’t mind.

But I was also running Longhorn, Rancher’s block storage CSI solution, which is useful when it works. Unfortunately, I found no Longhorn guidance on how to handle this, with one Longhorn engineer even saying “to use the old UI”. That isn’t a good solution: is everybody supposed to keep switching back and forth forever? Is there a migration plan coming? Any guidance whatsoever?

Ultimately, after more digging, I found I could modify the resources to let Helm 3 run on top of the existing install, and I got it working, but the lack of any solution for people who had been running Longhorn for a while (even while keeping everything else up to date) was frustrating.

Separate from that, the entire Rancher Helm UI is frustrating and broken. In fact I have two separate Helm bugs: 1 and 2.

In Rancher v2.6.x, if you modify an array Helm value, Rancher will merge any upstream changes into it and completely corrupt the values. I reported this issue on GitHub and separately in a Reddit thread where a Rancher employee responded, but after multiple back-and-forth replies, the Rancher employee didn’t seem to see that Rancher, not I, was responsible for this bug. I explicitly did not want Rancher to merge arrays; the merging was the UI’s doing. There’s no workaround in the UI if you hit this. I’ve hit it with external-dns, CoreDNS, and Prometheus. The only solutions are to use the Helm CLI or to remember to fix up the values every time you upgrade through the UI.
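To make the array problem concrete: Helm itself merges maps key by key but treats a list as a single value, so a user-supplied list replaces the chart default wholesale. Below is a minimal Python sketch contrasting that behavior with one plausible naive merge that combines lists position by position. The second function is a guess at the kind of logic that produces the corruption, not Rancher’s actual code, and both function names are hypothetical.

```python
from typing import Any


def merge_values(defaults: Any, overrides: Any) -> Any:
    """Helm-style merge: dicts merge recursively, everything else (lists included) is replaced."""
    if isinstance(defaults, dict) and isinstance(overrides, dict):
        merged = dict(defaults)
        for key, value in overrides.items():
            merged[key] = merge_values(defaults[key], value) if key in defaults else value
        return merged
    return overrides


def merge_by_index(defaults: Any, overrides: Any) -> Any:
    """Hypothetical naive merge that also combines lists element by element."""
    if isinstance(defaults, dict) and isinstance(overrides, dict):
        merged = dict(defaults)
        for key, value in overrides.items():
            merged[key] = merge_by_index(defaults[key], value) if key in defaults else value
        return merged
    if isinstance(defaults, list) and isinstance(overrides, list):
        combined = [merge_by_index(d, o) for d, o in zip(defaults, overrides)]
        longer = defaults if len(defaults) > len(overrides) else overrides
        # Whichever list is longer contributes its tail, so upstream entries
        # can leak into the user's list.
        return combined + longer[len(combined):]
    return overrides


upstream = {"args": ["--a", "--b"], "image": {"repo": "example/app", "tag": "1.0"}}
mine = {"args": ["--c"], "image": {"tag": "2.0"}}

print(merge_values(upstream, mine)["args"])    # ['--c']
print(merge_by_index(upstream, mine)["args"])  # ['--c', '--b']
```

With the Helm-style merge, the single user-supplied argument wins; with the index-based merge, the upstream `--b` quietly survives, which is exactly the kind of result that looks like corrupted values.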

The Rancher Helm UI does not make it easy to know which values are changing or which values I’ve overridden, but in that conversation the Rancher employee was confident that a toggle showing my values was sufficient. Users performing upgrades need a lot more context, and I wanted to see:

- A diff between the upstream values of vPrev and vNext, presented in the UI for me to review before deciding

- Visual separation of the overridden values from the upstream values, so I can understand what I’ve actually changed

- Highlighted conflicts between my overridden values and changes in the upstream values
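The three features above could be built from a fairly small core. Below is a rough Python sketch, assuming the previous chart defaults, the next chart defaults, and the user’s supplied values are all available as parsed dicts; the function names are hypothetical, and this is not how Rancher stores or compares values. Nested keys are flattened into dotted paths, lists are compared as whole values (matching how Helm replaces them), and a conflict is any key the user set explicitly whose upstream default changed between versions.

```python
from typing import Any, Dict, List

_MISSING = object()  # distinguishes "key absent" from a value of None


def flatten(values: Any, prefix: str = "") -> Dict[str, Any]:
    """Flatten nested dicts into dotted keys; lists are treated as single leaf values."""
    if isinstance(values, dict):
        flat: Dict[str, Any] = {}
        for key, value in values.items():
            path = f"{prefix}.{key}" if prefix else str(key)
            flat.update(flatten(value, path))
        return flat
    return {prefix: values}


def upgrade_report(prev_defaults: dict, next_defaults: dict, user_values: dict) -> Dict[str, List[str]]:
    old, new, mine = flatten(prev_defaults), flatten(next_defaults), flatten(user_values)
    # Keys whose upstream default differs between vPrev and vNext (added, removed, or changed).
    upstream_changed = {k for k in old.keys() | new.keys()
                        if old.get(k, _MISSING) != new.get(k, _MISSING)}
    # Keys the user set explicitly; these win over any upstream default.
    overridden = set(mine)
    return {
        "upstream_changed": sorted(upstream_changed),
        "overridden": sorted(overridden),
        # Upstream changes that the user's overrides would silently mask.
        "conflicts": sorted(upstream_changed & overridden),
    }


prev = {"image": {"tag": "1.0"}, "replicas": 1, "args": ["--a"]}
nxt = {"image": {"tag": "1.1"}, "replicas": 1, "args": ["--a", "--b"], "newFlag": True}
mine = {"image": {"tag": "0.9-custom"}, "replicas": 3}
print(upgrade_report(prev, nxt, mine))
```

For these made-up values, upstream changed `args`, `image.tag`, and `newFlag`; the user overrode `image.tag` and `replicas`; and the only conflict is `image.tag`, which is exactly the line a reviewer would want highlighted before clicking Upgrade.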

Rancher doesn’t even show you the comments from the app’s values.yaml because they made the choice to store the values as JSON.

There are more issues too. If you try to upgrade a Helm application while it’s already mid-upgrade, which version of the values does it use? Upgrades can get stuck with a failed Helm operation, and Rancher will just reject the upgrade and you’ll lose all your value changes. If there’s a Rancher questions.yaml in the chart (I love these because they make it easy for me to set values) and you have custom values, Rancher will silently delete values and you’ll end up with a broken install.

Right now the Rancher Helm UI is basically just upgrade, pray, and hope everything works. They need to cohesively rethink the experience to provide me, the user, with the tools and the confidence that it’s going to work. I even gave some suggestions in my GitHub issue and would love to give further feedback if Rancher wants it.

Rancher Pipeline Deprecation

The Rancher 2.6.0 release deprecated Rancher Pipelines and instead introduced Rancher Fleets with the Continuous Delivery feature. Their documentation states: “Fleet does not replace Rancher pipelines; the distinction is that Rancher pipelines are now powered by Fleet”, but it also says: “Pipelines in Kubernetes 1.21+ are no longer supported.” That seems contradictory.

Am I allowed to use the pipelines I created before, or do I need to go somewhere else? I tried adding my repository to the Rancher Continuous Delivery UI and it did… nothing. There was no feedback for several minutes until: “Request entity too large: limit is 3145728”. What’s wrong? I don’t know. Is the pipelines YAML still supported or not?

Rancher Pipelines was simple. My use case was just to kick off a Docker build from a GitHub webhook, push to the Rancher registry, then deploy the latest version. Rancher Fleet seems far more complicated.

So instead, I moved to GitHub Actions, which I didn’t like because now every simple repository needs GitHub secrets to push to my Docker Hub and a kubeconfig for my cluster.

Update Jan-24-22: After extensively reading through the docs and the fleet-examples repository, I started experimenting with Rancher Fleets to see if it could win my heart again, and I managed to get it working. It turns out I needed a separate pipeline just to contain the YAML files. However, it still doesn’t scratch the same itch that Rancher Pipelines did. It doesn’t seem to handle the Docker build, so I still have to use GitHub Actions, which requires adding K8s secrets to every repository. Less fun.

Fleets give indecipherable error messages. For example, I initially didn’t declare a dependency from one fleet.yaml to another, so the deployment failed. I then added a dependsOn, but force-updating the repo didn’t work; I had to delete and recreate the entire GitRepo. As soon as it’s in an error state, it doesn’t want to recover.

Longhorn Wonkiness

In theory, Longhorn is great. It was simple to deploy and start using. I didn’t have to deploy anything like Gluster or custom NFS servers. I loved it, so I started using it, but then I’d encounter random issues.

For example, I had a PVC where I used subPaths to mount folders in a couple of different places. This worked fine until, randomly, after an upgrade of *something*, all the subPaths were just empty. I’d mount the PVC in maintenance mode onto a host and could see the different folders exactly as I expected, with all the files, but when Kubernetes mounted it into the pod, each folder was empty. I was specifically doing this so a temp folder in the pod didn’t get stored permanently. Instead, I had to mount the entire folder with no subPaths, and that worked (though less ideally). Does this always happen? No. But I never found the root cause, so I was forced to go with this fix.

Backups and recurring jobs were changed in the latest version, and now they seem to be separate concepts. You can delete a recurring job without any warning that backups are linked to it, and afterward the backup doesn’t seem to run.

I have a backup scheduled for every day, but looking at the history I see only backups from 4 days and 16 hours ago. Where are the backups in between that? I even have retain set to 6, so the last 6 backups should be available.

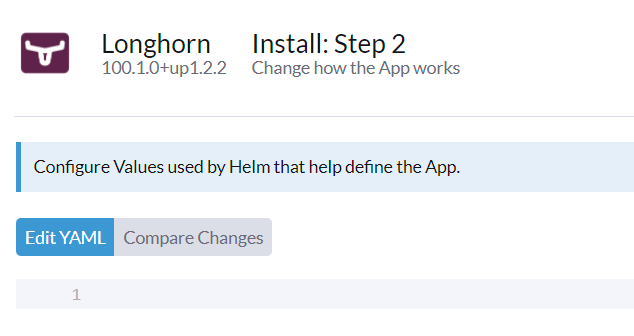
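With a daily schedule and retain set to 6, the history should show six consecutive days. A small Python sketch like the one below lists the days that have no backup at all. It assumes you can export the backup timestamps from the Longhorn UI or API, all in a single time zone (that export step is not shown); the helper name and the sample timestamps are made up for illustration.

```python
from datetime import date, datetime, timedelta
from typing import Iterable, List


def missing_backup_days(backups: Iterable[datetime], start: date, end: date) -> List[date]:
    """List every calendar day from start to end (inclusive) with no backup."""
    have = {ts.date() for ts in backups}
    missing = []
    day = start
    while day <= end:
        if day not in have:
            missing.append(day)
        day += timedelta(days=1)
    return missing


# Made-up history: only two backups in a six-day window.
history = [datetime(2022, 1, 10, 3, 0), datetime(2022, 1, 14, 3, 0)]
print(missing_backup_days(history, date(2022, 1, 10), date(2022, 1, 15)))
```

For this sample history it reports four missing days (Jan 11, 12, 13, and 15), which is the kind of gap a daily schedule should never produce.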

Don’t even get me started on when I tried to install Longhorn on my home lab K8s cluster. It failed to install with the ever-helpful “failed to wait for roles to be populated” error message and was left in a bad state where I had to explicitly delete the longhorn-system namespace. Apparently my cluster was still provisioning, even though I had been running it for months and had only restarted the master an hour earlier. Then, when attempting to install the Helm template, I’d be prompted with an empty values tab and it’d break.

I had to dig around in the Kubernetes resources to manually clean up everything, and somehow, magically, I got it working. The frustrating part was that there was minimal feedback about why it failed.

How do services work?

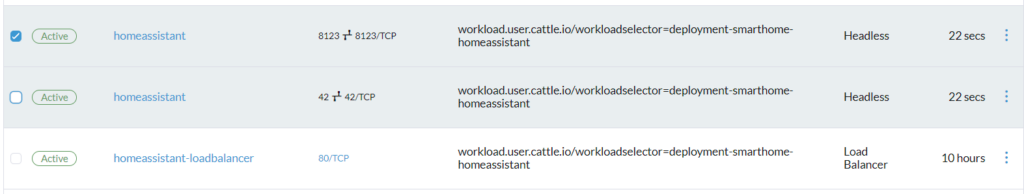

I do understand how to create services directly using YAML in Kubernetes and link Ingresses to them, but Rancher has a UI component that enables you to create ports for a deployment. This seems to create services sometimes? Sometimes I end up with two services that have the exact same name. This UI is confusing. Sometimes I delete a service directly, but then it just gets recreated with no feedback whatsoever.

Selecting “Do not create a service” seems like it wouldn’t create a service, but if a service already exists (like an LB), it changes to match that service. I get validation errors if I try to create two services with the same port, but then somehow the services get created anyway. I should be able to create Headless services from here too. If there are multiple services (both an LB and a ClusterIP/Headless service), then editing the LB service seems to edit both services, even though the Deployment page only lists the LB.

Unfortunately, this UI component does not actually mean anything. Rancher seems to ignore it if services already exist, but a UI should not be surprising. It should tell me exactly what’s going to happen.

Then there’s this annotation:


This annotation seems to control the ports that appear in the UI here:

But this annotation also ended up preventing me from deleting an LB service until I modified this annotation. Additionally, these links frequently show the wrong URL. Even though there’s an ingress configured and that ingress appears in that annotation, it still only shows 192.168.6.0 and https://192.168.6.8.

Deleting the ports block in the deployment and the annotation results in duplicate services:

There’s probably some simple bugs behind here, but they end up causing the user experience to be incredibly confusing. I don’t always have issues with

Rancher Role Change

Because the cluster wouldn’t come back up without some manual intervention whenever I rebooted my primary server, I eventually decided to get a 3rd dedicated server and run etcd/control plane across all 3 nodes, so the Kubernetes control plane could keep running while one machine was rebooted. I had previously been running one node as just a worker, since I know etcd doesn’t work well with only two nodes (losing either one leaves no majority).

In another test cluster, I had a two-node etcd cluster (just for testing), and I stopped one of the VMs to test removing that node, but RKE1 simply failed because the etcd on the remaining node would continually restart trying to connect. I had to take an etcd snapshot and restore it manually to recover.
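The majority rule behind this is simple arithmetic: etcd needs floor(n/2) + 1 of its n members to agree, so a two-member cluster tolerates zero failures, the same as a single member. That is consistent with the two-node test cluster falling over as soon as one VM was stopped. A short Python sketch of the arithmetic:

```python
def quorum(members: int) -> int:
    """Votes needed for a majority in an etcd (Raft) cluster of `members`."""
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can be down while the cluster still has quorum."""
    return members - quorum(members)


for n in range(1, 6):
    print(f"{n} members: quorum {quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

For 1 through 5 members this prints tolerated failures of 0, 0, 1, 1, 2, which is why 3 nodes is the smallest etcd cluster that survives one machine being rebooted.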

Back to the main problem: since there’s no option to change a node’s role, I removed the node from Rancher and re-provisioned it with all roles. It eventually came up, but a few minutes later I came back to find all the Kubernetes containers (etcd, kube-apiserver, kube-controller-manager) had been deleted. I retried several times by deleting the node and re-provisioning it; each time it would work for a few minutes, then get deleted. Finally I figured out that a Job had been scheduled on that node to uninstall everything, but because the node was already gone, the job ran the next time the node came up. Why is the job being scheduled if the node doesn’t exist in Kubernetes? And if the node gets re-provisioned, shouldn’t Rancher cancel the previous cleanup job?

This one is definitely an edge case. If it were just these kinds of issues, I’d be more likely to ignore them, but considering the breadth of other issues I have with Rancher, it’s hard to overlook.
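The guard that the cleanup-job questions hint at can be expressed simply: tie each cleanup job to the UID of the node object it was created for, not just the node’s name, since a Kubernetes node object that is deleted and later re-created under the same name gets a new UID. Below is a hypothetical Python sketch of that check; CleanupJob and should_run_cleanup are illustrative names, and this is not how Rancher actually schedules its cleanup jobs.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class CleanupJob:
    """Hypothetical record of a pending node cleanup."""
    node_name: str
    node_uid: str  # UID of the node object the cleanup was requested for


def should_run_cleanup(job: CleanupJob, current_nodes: Dict[str, str]) -> bool:
    """current_nodes maps node name -> UID of the node object that exists now.

    Run only if the very same node object still exists. If the node is gone,
    or was removed and re-provisioned under the same name (new UID), the job
    is stale and should be dropped instead of wiping the new node.
    """
    current_uid: Optional[str] = current_nodes.get(job.node_name)
    return current_uid == job.node_uid


job = CleanupJob(node_name="node-3", node_uid="uid-original")
print(should_run_cleanup(job, {"node-3": "uid-original"}))     # True: same node object
print(should_run_cleanup(job, {"node-3": "uid-reprovisioned"}))  # False: stale job
```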

Other Random Issues

Sure, Rancher v2.6.x is brand new and I’m sure there are going to be bugs, but some of them are easy to hit. Examples:

- If you’re filtering for a namespace, clicking Workloads will open the Apps view

- Clicking workloads sometimes doesn’t go to all workloads and instead shows the view you’re currently seeing (e.g. Jobs, Deployments, Stateful Sets)

- I frequently experience missing left-nav links where I can’t click to view some resource without reloading the page

- Updating resources sometimes gives me an error (Cannot read properties of undefined (reading ‘management.cattle.io/ui-managed’)), but then it successfully saves

- I often hit conflicts when saving changes to workloads because the resource version changed, even though my changes don’t conflict. Sometimes I’m trying to fix a boot loop, so instead I have to very quickly set replicas to 0, make my change, and scale back up. It should be possible to make patch changes in the UI

- The client-side UI randomly caches data and I have to refresh it periodically to clear out state.

- Creating a Helm application used to let you auto-create a namespace and defaulted the release name to the app name, but it doesn’t anymore. Now I have to manually create a namespace first.

- I can’t delete a K3s cluster that is turned off because there seems to be a finalizer on the resource that blocks until it can communicate with the cluster so I have to manually modify the resources to delete it.

- The Edit YAML view sometimes provides the schema for fields you haven’t provided. This can be useful, but there are weird bugs if you try to uncomment a value. Sometimes you can’t comment it back out, and sometimes it leads to incorrect YAML.

- I just tried to delete a path from an Ingress, but upon saving, nothing happened (no error feedback). I had to manually edit the YAML to delete the path
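On the save-conflict bullet above: Kubernetes uses optimistic concurrency, so a full update carries the resourceVersion it was read at and is rejected with a conflict if anything wrote to the object in the meantime, while a patch that lists only the changed fields and no resourceVersion is applied on top of whatever is current. The toy Python model below illustrates the difference; ToyStore and Conflict are hypothetical stand-ins for the API server, and json_merge_patch implements RFC 7386 JSON Merge Patch (Kubernetes also accepts strategic merge patches, which treat some lists differently).

```python
import copy
from typing import Any, Dict, Tuple


class Conflict(Exception):
    """Raised when an update is based on a stale resourceVersion."""


def json_merge_patch(target: Any, patch: Any) -> Any:
    """Apply an RFC 7386 JSON Merge Patch: None deletes a key, dicts recurse."""
    if not isinstance(patch, dict):
        return copy.deepcopy(patch)
    result = copy.deepcopy(target) if isinstance(target, dict) else {}
    for key, value in patch.items():
        if value is None:
            result.pop(key, None)
        else:
            result[key] = json_merge_patch(result.get(key), value)
    return result


class ToyStore:
    """Toy stand-in for the API server's optimistic concurrency check."""

    def __init__(self, obj: Dict[str, Any]):
        self.obj = copy.deepcopy(obj)
        self.resource_version = 1

    def get(self) -> Tuple[int, Dict[str, Any]]:
        return self.resource_version, copy.deepcopy(self.obj)

    def update(self, based_on_version: int, new_obj: Dict[str, Any]) -> None:
        # A full replace is rejected if someone else wrote in the meantime.
        if based_on_version != self.resource_version:
            raise Conflict(f"stale version {based_on_version}, current is {self.resource_version}")
        self.obj = copy.deepcopy(new_obj)
        self.resource_version += 1

    def patch(self, merge_patch: Dict[str, Any]) -> None:
        # A patch names only the fields it changes, so it applies to whatever is current.
        self.obj = json_merge_patch(self.obj, merge_patch)
        self.resource_version += 1


store = ToyStore({"spec": {"replicas": 1, "template": {"image": "app:1"}}})
version, edited = store.get()                 # the UI loads the object
store.patch({"spec": {"replicas": 0}})        # something else writes in the meantime
edited["spec"]["template"]["image"] = "app:2"
try:
    store.update(version, edited)             # full save: stale version
except Conflict as err:
    print("update rejected:", err)
store.patch({"spec": {"template": {"image": "app:2"}}})  # patch: applies cleanly
print(store.obj)
```

The patch leaves the concurrent replicas change intact and only touches the image, which is the behavior a "save just my change" option in the UI would need.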

Summary

Rancher is, in theory, pretty cool, which is why I’ve continued to use it in the face of so many issues. I understand that orchestration systems are very challenging to implement correctly and I want to recognize the hard work of those who worked on it, but ultimately I’m hitting a point where I can’t trust Rancher to safely manage my own cluster. And if I can’t trust it on a simple cluster, am I going to recommend that an employer use this product?

All through these events, I’ve had the opportunity to learn in depth how Kubernetes and Rancher both work, but is that what I wanted to spend my free-time on? Ehh not exactly. One cluster rebuild is enough.

Filing GitHub issues felt fruitless: the issues I filed were either ignored or, in the case of my Helm issue, which did get somebody to look at it, misunderstood, with the assumption that I was wrong. Other times the issues are hard to reproduce, or it’s hard to find a relevant log statement to demonstrate them. I get that they currently have 2k open issues.