In the previous post, we ended up abusing subnets and routing to get Calico to exist on the correct subnet. But what if we could get rid of Calico’s duplicate IPAM system and just depend on our existing DHCP server to handle reservations? In this post, we’re going to prototype a cluster that uses DHCP + layer 2 Linux bridging to avoid the complications outlined in Part 3.

The official CNI documentation describes two plugins that could be relevant.

With dhcp plugin the containers can get an IP allocated by a DHCP server already running on your network.

This avoids overlapping IPAM problems with the previous solution and means that the DHCP server already running on my network would be responsible for handing out IP addresses directly to the containers.

That handles IP address assignment. Next, we need to be able to switch packets to the correct container interface. The documentation references both macvlan and ipvlan as possible switching options. The key difference is that ipvlan exposes only a single MAC address (the parent interface’s), whereas macvlan assigns a separate MAC address to each container and exposes them to the rest of the network. Ipvlan is generally recommended only when you need a single MAC address, like when you’re binding to a Wi-Fi adapter which only permits one MAC address per station.

I created a new cluster in Rancher with a new VM, following my previous blog posts. However, in Rancher 2.6.1+ it seems that I am unable to access the cluster through Rancher if there’s no CNI plugin installed on it, so I used kubectl to connect to the cluster directly instead. This is possibly a regression from 2.6.0 and I need to get around to reporting it.

I didn’t find a k8s installer that would deploy and configure the macvlan + DHCP CNI correctly, so we’re going to need to do this manually. In a future blog post, I will package this up into a polished file that can be deployed. First, download the latest release of the CNI plugins from their GitHub releases page. Extract it to the host’s /opt/cni/bin folder, so you have /opt/cni/bin/dhcp.
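Before rebooting, it is worth confirming that the binaries actually landed where the container runtime will look for them. Here is a minimal sketch of such a check. The list of required plugins (`dhcp` and `macvlan`) is my assumption based on what this post uses, and `missing_plugins` is just an illustrative helper name.

```python
import os

# Plugin names assumed from this post: dhcp (IPAM) and macvlan (interface).
REQUIRED_PLUGINS = ("dhcp", "macvlan")


def missing_plugins(bin_dir="/opt/cni/bin", required=REQUIRED_PLUGINS):
    """Return the required plugin names that are absent or not executable."""
    missing = []
    for name in required:
        path = os.path.join(bin_dir, name)
        # A plugin is usable only if it is a regular file with an execute bit.
        if not (os.path.isfile(path) and os.access(path, os.X_OK)):
            missing.append(name)
    return missing


if __name__ == "__main__":
    absent = missing_plugins()
    if absent:
        print("missing CNI plugins:", ", ".join(absent))
    else:
        print("all required CNI plugins are present")
```

If the tarball was extracted into a subfolder by mistake, this reports both plugins as missing.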

Then create /etc/cni/net.d/15-bridge.conflist and reboot.

| |
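For reference, here is a minimal sketch of what a macvlan + DHCP conflist can look like, generated from Python so it is easy to tweak. This is not necessarily the exact file I used: the network name `lan`, the parent interface `eth0`, and the `cniVersion` are assumptions you should adapt to your host.

```python
import json


def macvlan_dhcp_conflist(master="eth0", name="lan"):
    """Build a CNI conflist that attaches pods to `master` via macvlan
    and delegates address assignment to the dhcp IPAM plugin."""
    return {
        "cniVersion": "0.4.0",  # assumed; match what your runtime supports
        "name": name,           # hypothetical network name
        "plugins": [
            {
                "type": "macvlan",
                "master": master,   # host interface the pods hang off of
                "mode": "bridge",
                # The dhcp IPAM plugin needs its daemon running on the host
                # (the "DHCP container" mentioned in this post).
                "ipam": {"type": "dhcp"},
            }
        ],
    }


if __name__ == "__main__":
    # Print the JSON; redirect it into the conflist path used above.
    print(json.dumps(macvlan_dhcp_conflist(), indent=2))
```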

After the host came up, the DHCP requests were not making it out to the network, but they were visible on the VM’s network interface:

| |

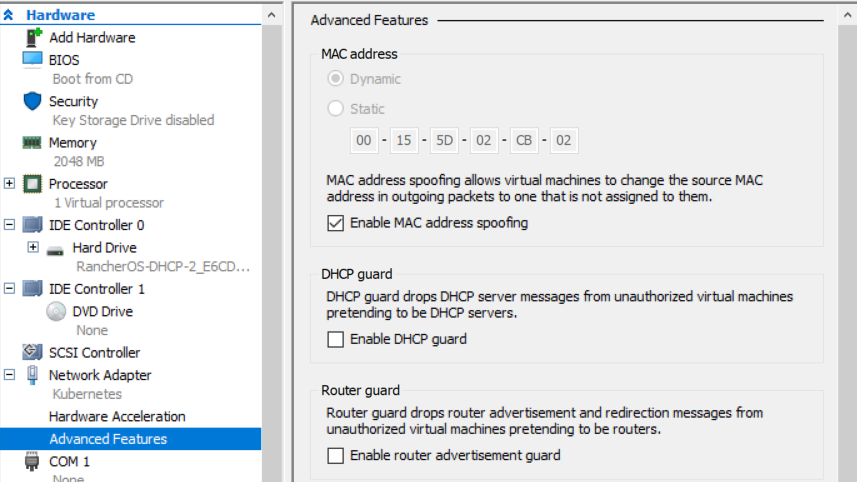

Some digging revealed that this is because I’m using macvlan, which lets each container use its own MAC address. Hyper-V was configured to block this for security. To fix this, check the “Enable MAC address spoofing” option in VM Settings > Network Adapter > Advanced Features. My understanding is that ipvlan may not require this option since it rewrites traffic to use the VM’s MAC address.

Enabling MAC address spoofing in Hyper-V enables us to use macvlan, but could reduce security.

After that, I restarted the DHCP container and, poof, we had reservations:

| |

Containers were coming up with the right IP addresses and I was able to ping them from other computers, but I was not able to ping them from the host VM. This was odd. If anything, I would have expected the reverse. Apparently this is expected behavior for macvlan:

Irrespective of the mode used for the macvlan, there’s no connectivity from whatever uses the macvlan (eg a container) to the lower device. This is by design, and is due to the way macvlan interfaces “hook into” their physical interface.

https://backreference.org/2014/03/20/some-notes-on-macvlanmacvtap/

This was also preventing kubelet from initializing the cluster:

| |

This is because macvlan by default does not route traffic from the container to the host. To fix this, we need to add the host interface into the bridge so containers can send traffic to it.

| |
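One common way to give the host a foot in the macvlan network is to create a macvlan interface on the host itself and route pod traffic through it. The sketch below only builds and prints the `ip` commands, so nothing changes until you run them yourself as root. Treat it as an illustration rather than the exact commands I ran: the addresses are placeholders, and `pod_cidr` assumes your DHCP server hands pods addresses from a dedicated sub-range.

```python
import shlex


def host_macvlan_commands(parent="eth0", name="mac0",
                          host_ip="192.168.1.10",         # placeholder
                          pod_cidr="192.168.1.192/26"):   # placeholder
    """Return the iproute2 commands (as argv lists) that create a host-side
    macvlan interface and send pod-bound traffic through it."""
    return [
        # Create the host's own macvlan interface on top of the parent NIC.
        ["ip", "link", "add", name, "link", parent,
         "type", "macvlan", "mode", "bridge"],
        # Give it the host's address as a /32 so no subnet route is implied.
        ["ip", "addr", "add", f"{host_ip}/32", "dev", name],
        ["ip", "link", "set", name, "up"],
        # Reach pods via the macvlan interface instead of the parent NIC.
        ["ip", "route", "add", pod_cidr, "dev", name],
    ]


if __name__ == "__main__":
    for argv in host_macvlan_commands():
        print(shlex.join(argv))
```

The route is the part that matters: without it the host keeps sending pod traffic out of the parent interface, where macvlan drops it.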

The next problem I encountered was particularly insidious, partly because I was already running a K8s cluster in a separate VM.

Kubernetes networking is complicated. The CNI is responsible for creating the network interface that each container uses. However, Kubernetes also has a component called kube-proxy, which is responsible for exposing certain services, such as kube-dns and the main Kubernetes HTTPS endpoint. Each container automatically gets a K8s service token and several environment variables pointing it to the correct IP address:

| |

Note how it provides the 10.43.0.1 address for Kubernetes! This IP address doesn’t match anything that we’ve previously configured in any of the CNI configuration. Kube-proxy uses iptables to fake these IP addresses:

| |

However, macvlan is special because packets from containers don’t get processed by the host’s iptables rules. Thus, this iptables magic doesn’t work and the packet gets forwarded out to the physical network. In my case, I was already running a separate k8s cluster and my router was forwarding it to the old API gateway which lead
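To see why the service address escapes onto the physical network, it helps to walk through the pod’s routing decision. This sketch models it with Python’s `ipaddress` module. The LAN subnet and gateway are placeholder values, not my real network, and `next_hop` is a simplified stand-in for the kernel’s route lookup.

```python
import ipaddress


def next_hop(dest, connected, gateway):
    """Return 'direct' if dest is on a directly connected subnet,
    otherwise return the default gateway the packet is sent to."""
    addr = ipaddress.ip_address(dest)
    for net in connected:
        if addr in ipaddress.ip_network(net):
            return "direct"
    return gateway


if __name__ == "__main__":
    lan = ["192.168.1.0/24"]   # placeholder: subnet the pod got via DHCP
    router = "192.168.1.1"     # placeholder: default gateway from DHCP
    # Another pod on the LAN is reached directly over layer 2.
    print(next_hop("192.168.1.50", lan, router))  # direct
    # The service IP matches no connected subnet, so it goes to the router.
    print(next_hop("10.43.0.1", lan, router))     # 192.168.1.1
```

Since the pod’s only route for 10.43.0.1 is the default gateway, the packet never passes through the host’s iptables rules where kube-proxy would have rewritten it.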

To fix this, I used the route-override CNI plugin to add a route that sends 10.43.0.0/16 to the host’s IP address, where the iptables rules will apply. I downloaded this CNI plugin and extracted it to /opt/cni/bin/route-override. We add the following plugin to the CNI configuration in /etc/cni/net.d/10-bridge.conflist and reboot:

| |

Both of these IP addresses are hard-coded and depend on the cluster configuration and the host IP, so when we expand to multiple hosts we’ll need to generalize this.
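As a first step towards generalizing it, the plugin entry can be generated per host instead of typed by hand. This is a rough sketch: the `addroutes` / `dst` / `gw` keys follow my reading of the route-override plugin’s README, so double-check them against the version you install, and the host IP shown is a placeholder.

```python
import ipaddress
import json


def route_override_plugin(service_cidr, host_ip):
    """Build a route-override plugin entry that sends the service range
    to the host, where kube-proxy's iptables rules can handle it."""
    # Validate both inputs early; these raise ValueError on bad input.
    ipaddress.ip_network(service_cidr)
    ipaddress.ip_address(host_ip)
    return {
        "type": "route-override",
        "addroutes": [{"dst": service_cidr, "gw": host_ip}],
    }


if __name__ == "__main__":
    # 10.43.0.0/16 is the service range from this post;
    # 192.168.1.10 is a placeholder for the host's own address.
    print(json.dumps(route_override_plugin("10.43.0.0/16", "192.168.1.10"),
                     indent=2))
```

The resulting object goes into the `plugins` array of the conflist, after the macvlan entry.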

After this, all of my pods came up with IP addresses and were able to communicate with each other. However, ~12 hours later the routes on the mac0 interface were removed and all networking stopped working.

| |

This seems to coincide with the host’s DHCP client renewing the IP address for eth0.

| |

Apparently the DHCP system is clearing out the mac0 interface configuration. To fix this, we can run the following command:

| |

Unfortunately, I don’t know of a good way to do this from Kubernetes, or whether this is necessary on host VMs that aren’t based on RancherOS. Leave a comment below if you have a better suggestion.

In the next post, I revisit this setup and find that macvlan causes some problems with K8s service routing, so read through that as well.